Modern data architectures are under pressure from two opposing forces: the need for real-time responsiveness and the demand for stricter governance, correctness, and cost control. Organizations must detect anomalies instantly, personalize experiences in motion, and automate decisions as events happen—while still producing certified, auditable data.

This tension has pushed streaming vs batch processing back to the center of enterprise data strategy. The streaming vs batch processing debate is no longer theoretical. It appears in platform cost overruns, unstable dashboards, delayed analytics, and systems that are technically fast but operationally fragile. Many companies adopt streaming architectures expecting immediate business value—often encouraged by cloud-native and event-driven patterns described in modern platform documentation—only to discover that real-time systems introduce new failure modes and data trust challenges.

At the same time, batch processing alone is insufficient for use cases where delayed data translates into risk, missed opportunity, or degraded experience. As distributed systems and APIs become standard, the limits of purely scheduled processing grow more visible.

Choosing between streaming vs batch processing is therefore a foundational architecture decision. It affects latency, reliability, correctness, scalability, and operational responsibility. Major cloud providers explicitly distinguish streaming vs batch processing because the tradeoffs shape cost models and system ownership.

In this article, we examine streaming vs batch processing from an enterprise perspective: what each model means in practice, where each excels, where each breaks down, and how successful organizations combine both into sustainable hybrid architectures.

At scale, streaming vs batch processing is less a tooling debate than a decision about operating model and data trust.

Streaming vs Batch Processing: What They Really Mean in Modern Data Systems

The operational definition (not the marketing one)

Streaming vs batch processing is often described as a simple speed comparison, but in enterprise systems the distinction is architectural. Batch processing works on bounded datasets: data is collected over a defined window and processed as a discrete job. Streaming processing operates on unbounded data, where events continuously arrive and must be handled without a natural stopping point.

In batch processing, outputs are typically versioned, validated, and published after execution completes. In streaming processing, outputs evolve as new events arrive, and the system must manage state, ordering, and failure recovery continuously. This is why modern stream processing engines are defined as continuous computation systems rather than scheduled jobs.

Streaming vs batch processing therefore changes the execution model itself. One is designed around controlled execution cycles. The other is designed around perpetual computation.

Why the distinction matters more in 2025+ architectures

The difference between these models matters more today because data pipelines are no longer isolated analytics systems. They power automation, trigger workflows, and feed operational decisions. As more architectures shift toward asynchronous and event-driven communication models, streaming processing becomes embedded in how services interact, not just how analytics are generated.

At the same time, governance and cost pressures have intensified. Organizations cannot simply default to streaming everywhere. Continuous systems require continuous compute, stronger observability, and more sophisticated state management. When teams underestimate this shift, streaming vs batch processing decisions become operational liabilities rather than accelerators.

The pressure for immediacy is real, but so is the need for stability.

Why most organizations end up using both

In practice, streaming vs batch processing is rarely a binary decision. Most mature data platforms combine both models deliberately.

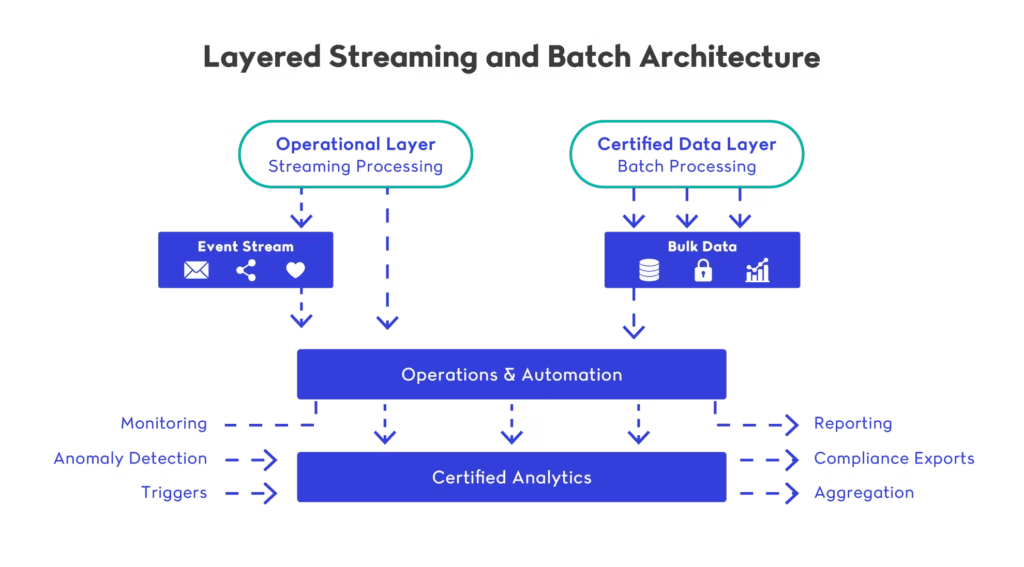

Streaming processing supports operational responsiveness: event-triggered workflows, anomaly detection, personalization, and monitoring. Batch processing supports stability and trust: reconciled reports, compliance datasets, and long-term historical analysis.

Industry architecture patterns increasingly reflect this layered approach, where real-time ingestion feeds operational systems while scheduled consolidation produces authoritative analytics datasets.

A useful mental model is this: streaming systems optimize for reaction speed, while batch systems optimize for data confidence. The most resilient architectures balance both rather than forcing a single model across all workloads.

What this layered view makes clear is that streaming vs batch processing is not simply a technical optimization. It is a structural decision about where immediacy belongs in your architecture and where stability must dominate. Once that distinction is explicit, the conversation shifts from abstract debate to practical evaluation: which workloads truly require continuous processing, and which ones are better served by controlled execution windows?

Framed this way, streaming vs batch processing becomes a question of continuous operation versus controlled certification.

When Streaming Processing Is the Right Choice

Workloads where latency directly creates business value

Streaming processing is the right architectural choice when the value of data decays quickly. If an event is only useful for a short period of time, delaying it by hours through batch processing effectively destroys the business value.

This is the clearest way to evaluate streaming vs batch processing in enterprise environments: not by asking whether real-time is possible, but by asking whether real-time is necessary. Fraud detection is the obvious example. A fraudulent transaction discovered 12 hours later may still be useful for reporting, but it is no longer useful for prevention. This is why cloud providers explicitly position streaming architectures for time-sensitive use cases such as monitoring, personalization, and anomaly detection.

The same applies to operational monitoring. If a system anomaly is detected after a batch window closes, the damage is already done. Streaming processing supports alerting, escalation, and automated mitigation while the issue is still unfolding. In these scenarios, the architecture must support continuous ingestion and processing rather than scheduled execution.

A practical rule is that streaming processing is justified when the output triggers action. If the output only informs retrospective analysis, batch processing is usually sufficient.

Streaming patterns that scale (and ones that don’t)

Streaming processing scales best when it is treated as an event pipeline, not as a real-time data warehouse. Patterns such as filtering, enrichment, routing, and lightweight aggregations are ideal because they keep state manageable and failure recovery predictable.

Modern stream processing engines emphasize state management, checkpointing, and fault tolerance because continuous systems must survive failures without stopping the flow of data. This architectural requirement is what distinguishes streaming processing from simply running smaller batch jobs more frequently.

In practice, streaming processing becomes risky when teams attempt to replicate heavy batch-style transformations in a continuous environment. Large joins, extended historical lookbacks, and deeply layered business rules increase memory pressure and recovery complexity. These are usually better handled in downstream batch processing layers where deterministic validation is easier to enforce.

For a deeper look at practical design tradeoffs in real-time architectures, you can explore modern streaming pipeline patterns discussed in enterprise engineering contexts.

Common failure modes in streaming-first systems

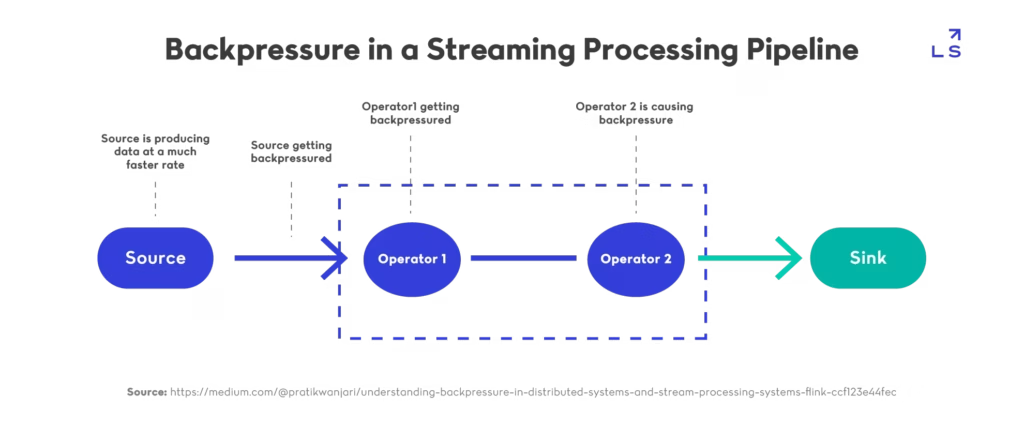

Streaming processing introduces failure modes that batch pipelines rarely encounter. The most common is backpressure: incoming events exceed processing capacity, and lag accumulates until outputs lose operational value. This behavior is well documented in streaming platform design discussions, where throughput and latency must be balanced carefully.

Another failure mode is silent correctness drift. Streaming systems often continue running even when data quality issues occur. Duplicate events, missing events, or incompatible schema changes can gradually corrupt outputs while dashboards still appear active.

This is why streaming vs batch processing is also an observability and governance decision. Continuous systems require explicit monitoring of lag, state size, and delivery guarantees. Without mature operational practices, real-time platforms can become complex infrastructure layers that are fast but not trusted.The practical lesson is that streaming vs batch processing should be decided by whether the output triggers immediate action.

When Batch Processing Is Still the Best Architecture

Batch is still the default for governance-heavy enterprises

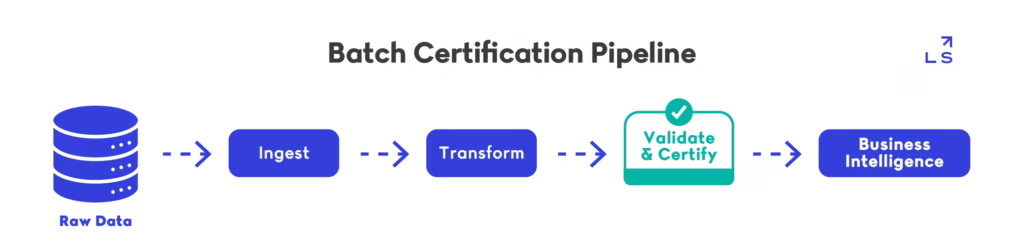

Batch processing remains the default architecture in most enterprises because it aligns with how organizations define trust. In highly regulated industries, “correct” often matters more than “fast,” especially when data is used for financial reporting, compliance submissions, or audit-grade decision making.

This is one of the most overlooked realities in streaming vs batch processing decisions. A real-time system may provide fast visibility, but if stakeholders cannot reconcile the output, they will not treat it as authoritative. Batch processing supports repeatability and validation because results are generated from fixed inputs, over a fixed window, with controlled execution.

In practical terms, batch processing is not just a technical method. It is an operating model that matches governance requirements: predictable datasets, scheduled publication, and traceable lineage.

Cost control and predictability at scale

Batch processing is also easier to control financially. Jobs run at scheduled intervals, compute can be scaled aggressively during execution, and then shut down. This makes it easier to forecast infrastructure costs, especially in cloud environments where streaming systems can introduce persistent baseline spend.

This cost dynamic becomes a major factor when streaming vs batch processing is evaluated at platform scale. Running continuous processing for every dataset often leads to high operational overhead without proportional business value. Batch processing allows organizations to prioritize compute resources only when necessary, often using scheduling and orchestration layers that can be optimized over time.

The ability to run heavy transformations in isolated windows is one reason batch workloads remain central to large-scale analytics architectures described in modern data platform guidance.

The hidden advantage: deterministic replay and validation

One of batch processing’s strongest advantages is replayability. When something goes wrong, teams can rerun the job with the same inputs and validate outputs before publishing. This deterministic behavior makes batch processing ideal for pipelines where correctness is more important than immediacy.

By contrast, streaming systems are often harder to replay cleanly. They may require offset rewinds, state restoration, or reprocessing large volumes of historical events, which introduces operational risk. For many enterprises, streaming vs batch processing is decided by auditability, replay, and publication control rather than speed.

This is why streaming vs batch processing decisions often come down to how much the organization can tolerate uncertainty in the short term. Batch processing is structurally aligned with certification workflows: quality checks, reconciliation logic, and publish gates. These practices map naturally to enterprise expectations of what “trusted data” means.

For organizations building analytics products, batch processing also supports clearer contracts between teams. Data is published at known times, in known formats, and downstream consumers can design around stable delivery schedules. This aligns closely with modern data product thinking where reliability and governance are treated as first-class requirements.

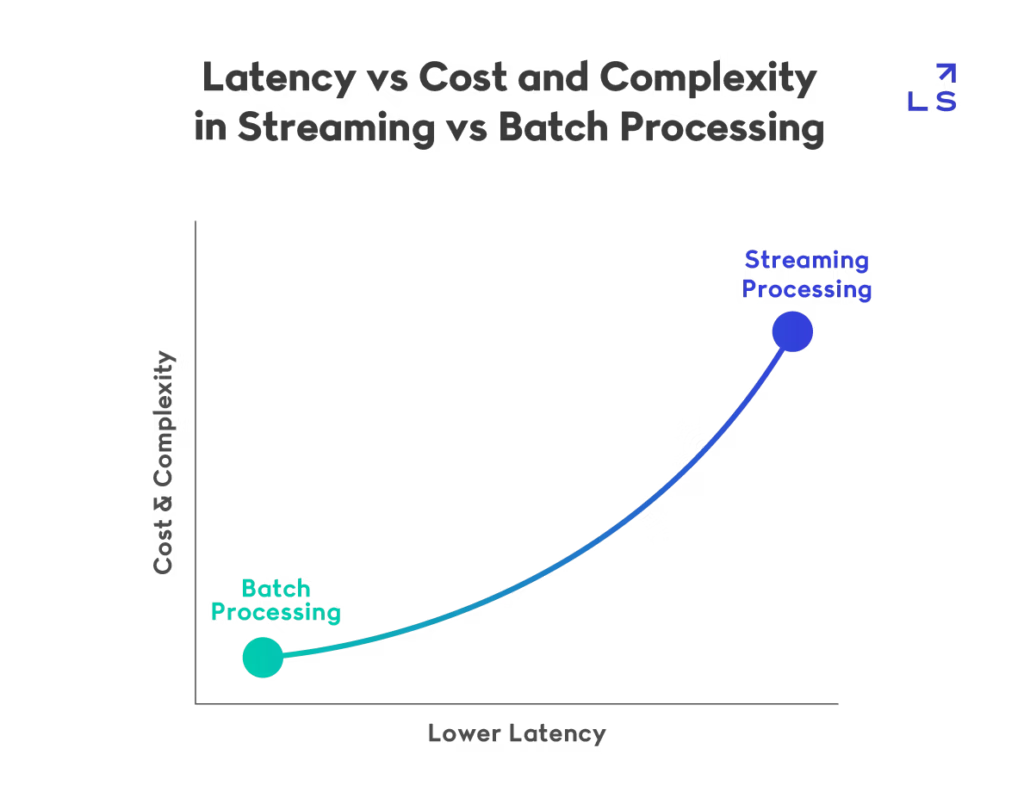

The Real Tradeoff: Latency, Cost, and Operational Complexity

Why “real-time” is rarely free

Streaming vs batch processing is often framed as a performance decision, but in enterprise environments it is closer to an operational economics decision. Real-time processing reduces latency, but it forces the organization to treat data processing as a continuously running system rather than a scheduled workload.

Batch processing pipelines can fail, rerun, and recover in controlled cycles. Streaming systems must remain available at all times, which means failures become production incidents rather than delayed job completions. This is why “real-time” is rarely a simple upgrade. It changes the entire reliability model.

This shift is reflected in how streaming platforms define their core value: fault tolerance, replayability, and state management become first-class concerns rather than implementation details.

Where cost explodes in streaming platforms

The cost risk in streaming systems is not only infrastructure. It is also platform complexity. Streaming architectures require persistent compute, durable state storage, continuous monitoring, and more advanced operational practices. Unlike batch workloads, which can often be scaled up and shut down after execution, streaming systems must remain provisioned even during low traffic periods.

Streaming vs batch processing decisions therefore become cost decisions at multiple layers:

- baseline infrastructure cost

- operational cost of observability and incident response

- engineering cost of maintaining continuous correctness

- long-term platform cost of schema evolution management

This is why many teams over-invest in streaming early. They optimize for immediacy without recognizing that the long-term cost is the operating model, not the throughput.

Cloud architecture guidance frequently frames streaming adoption as an architectural tradeoff because continuous ingestion creates permanent platform responsibility rather than temporary workload responsibility.

The people cost: who has to operate the system

The most underestimated cost in streaming vs batch processing is organizational. Streaming systems require clear ownership boundaries. Someone must be accountable for lag, throughput, schema drift, delivery guarantees, and replay procedures. Without defined ownership, real-time systems degrade quietly until they become untrusted.

Batch processing is more forgiving because the system has natural checkpoints. A failed batch job is visible, isolated, and usually recoverable through reruns. Streaming failures can be partially hidden: outputs continue flowing, but correctness can degrade or become delayed.

This is also why streaming platforms push organizations toward stronger DevOps maturity. CI/CD practices must be more robust, rollback strategies must be defined, and monitoring must cover not just uptime but correctness indicators. Mature pipeline automation and deployment practices become foundational when streaming workloads are treated as always-on production systems.

In other words, streaming vs batch processing is not only a technology choice. It is a decision about whether the organization is ready to operate continuous systems with production-grade accountability. At scale, streaming vs batch processing is an economic tradeoff where lower latency typically requires higher operational complexity.

Data Consistency and Correctness: What Changes Between Streaming and Batch

Exactly-once vs at-least-once in practice

One of the most misunderstood dimensions of streaming vs batch processing is not speed — it is correctness. How a system guarantees delivery, ordering, and state consistency determines whether outputs can be trusted in production environments.

Batch processing operates on complete datasets. Because the input is bounded, results can be recalculated deterministically. If a job fails, it can be rerun against the same data, producing identical outputs. This deterministic behavior makes batch processing easier to certify, reconcile, and audit.

Streaming processing, by contrast, deals with continuous input. Events may arrive out of order, duplicated, or delayed. Modern stream processors provide mechanisms such as checkpointing and state persistence to achieve strong guarantees, but the guarantees depend on correct configuration and disciplined operations.

This is where streaming vs batch processing becomes a trust question. Batch systems guarantee correctness by design. Streaming systems guarantee correctness through coordination and state management.

Late-arriving data and event-time complexity

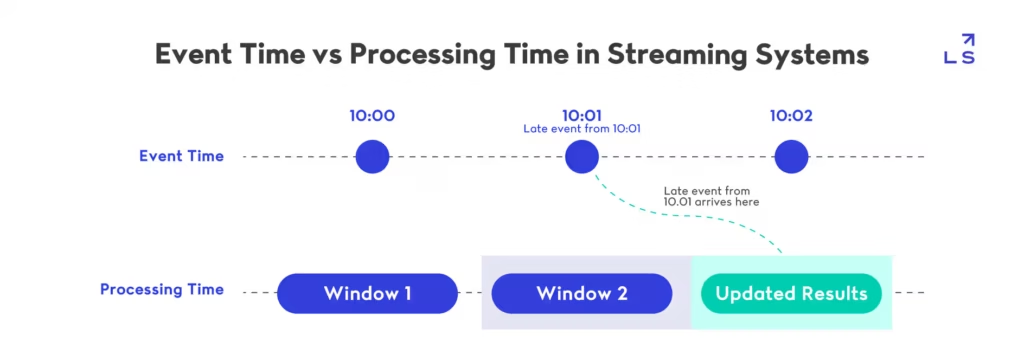

In batch processing, time is simple. Data collected during a window is processed as a unit. In streaming processing, time becomes multidimensional. There is event time (when something happened) and processing time (when the system sees it).

Late-arriving data complicates this further. A transaction that occurs at 10:01 may not reach the system until 10:07. Streaming systems must use windowing strategies and watermarking to decide when results are considered complete, which introduces conceptual complexity into the architecture.

When evaluating streaming vs batch processing, this distinction matters. Batch systems can wait for completeness before computing. Streaming systems must decide how long to wait before publishing intermediate results.

This is where streaming vs batch processing becomes a trust decision, because correctness is finalized differently in continuous and scheduled systems.

This is why stakeholders sometimes perceive streaming dashboards as unstable. Numbers may change as late data arrives and windows close. Without clear communication, this behavior can erode trust in real-time systems.

Why batch expectations break streaming dashboards

Enterprise stakeholders are accustomed to batch outputs that do not change once published. A monthly financial report is not expected to update retroactively every few minutes. Streaming systems challenge that expectation.

In streaming vs batch processing discussions, this cultural gap is often ignored. Teams implement streaming pipelines assuming that faster data automatically improves decision-making. In reality, decision-makers may struggle with continuously shifting metrics unless the organization defines clear expectations about provisional versus certified results.

One effective hybrid pattern is to use streaming processing for immediate visibility while reserving batch processing for authoritative outputs. Real-time dashboards show what is happening now, while batch-certified datasets confirm what has officially occurred. This layered approach is increasingly common in modern data platform guidance.

The deeper lesson is this: streaming vs batch processing changes not only system design, but also how organizations define truth. Speed introduces ambiguity. Structure introduces certainty. The architecture must reflect which of those qualities matters most for a given workload.

Choosing Streaming vs Batch Processing by Use Case

A practical decision model

The fastest way to make streaming vs batch processing decisions is to stop thinking in terms of technology and start thinking in terms of time sensitivity. Not every workload benefits from real-time execution, and many organizations overestimate how often immediate processing actually changes outcomes.

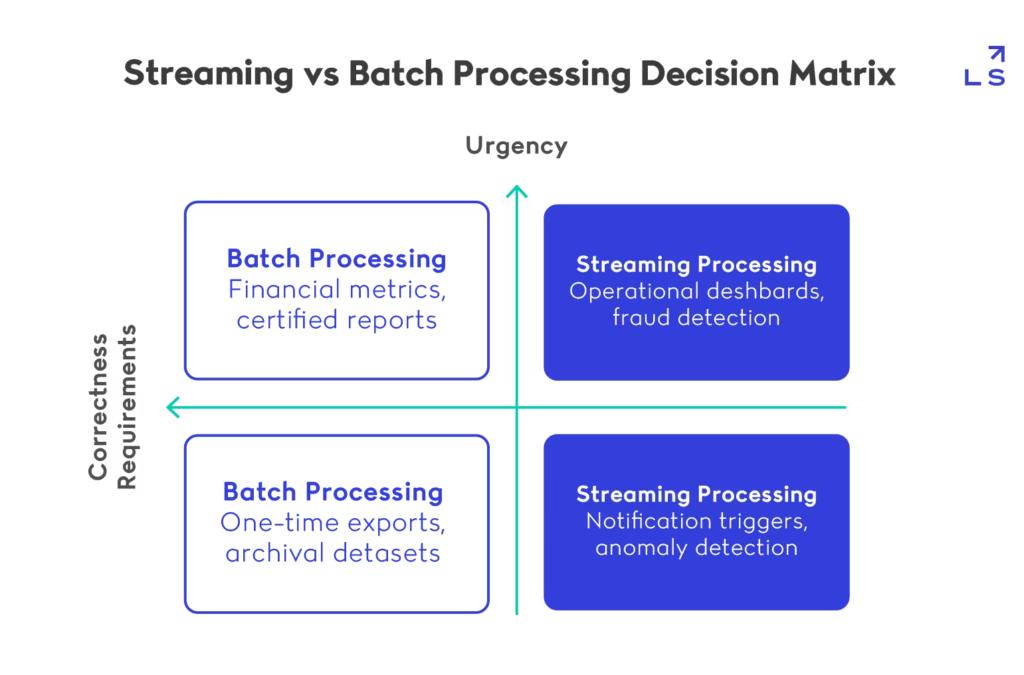

A practical model is to evaluate each pipeline using three questions:

- Does the value of the data decay quickly?

- Does the output trigger action or only inform reporting?

- Is the business comfortable with provisional results?

If the answer is yes to the first two, streaming processing is often justified. If the workload primarily supports analytics, reconciliation, or compliance, batch processing is usually the correct foundation.

This framing keeps streaming vs batch processing grounded in business value rather than architectural ambition.

This matrix makes the streaming vs batch processing decision explicit: workloads that demand immediate action and tolerate evolving results lean toward streaming, while workloads that prioritize accuracy and certification belong in batch pipelines. The key is aligning architectural choice with business expectations rather than technical preference.

Used consistently, streaming vs batch processing becomes a repeatable classification method instead of an opinionated architecture argument.

Recommended enterprise workload mapping

Once the decision model is clear, streaming vs batch processing becomes easier to map to real enterprise workloads.

Streaming processing is best suited for:

- fraud detection and risk scoring

- system monitoring and anomaly detection

- user behavior tracking that powers live personalization

- operational alerts and automated escalation workflows

- event-triggered automation in distributed systems

These use cases align with the idea of stream processing as continuous reaction to events, which is how modern platforms position real-time analytics.

Batch processing is best suited for:

- finance reporting and revenue reconciliation

- compliance exports and audit datasets

- large-scale aggregation and historical trend analysis

- machine learning training datasets

- certified BI metrics and executive dashboards

These workloads benefit from deterministic computation, controlled publication schedules, and repeatable reruns.

For most enterprises, streaming vs batch processing ends up splitting along a simple line: streaming drives operational responsiveness, while batch protects trusted business truth.

The “real-time temptation” problem

The most common mistake organizations make is assuming that real-time is always better. Once a company sees streaming dashboards or event-driven automation in action, there is a tendency to demand streaming pipelines for every domain.

This is where streaming vs batch processing decisions become dangerous. Real-time systems introduce continuous operational responsibility. They also introduce complexity around late data, ordering, and correctness guarantees. If a workload does not require immediate action, streaming processing can become an expensive engineering theater.

A healthier strategy is to treat batch processing as the default and justify streaming only when latency creates measurable business value. This is also why modern architectural guidance tends to position streaming systems as an optimization layer rather than the baseline architecture.

In practice, teams succeed when they define clear boundaries: which metrics are “live,” which datasets are “certified,” and which outputs are allowed to change after publication. That clarity is what makes streaming vs batch processing sustainable at enterprise scale.

The Hybrid Reality: Most Enterprises Need Both

The hybrid reference architecture that works

In most real-world environments, streaming vs batch processing is not a binary decision. It is a structural design problem: how do you combine immediacy with certification without duplicating logic or creating competing sources of truth?

The most resilient hybrid architectures begin with durable ingestion. Data is captured once, stored reliably, and made available for multiple downstream processing models. Streaming processing then produces fast operational outputs such as anomaly flags, alerts, or near-real-time dashboards. Batch processing runs on scheduled cycles to recompute, reconcile, and publish certified datasets for reporting, governance, and long-term analytics.

This layered model is increasingly reflected in modern enterprise data engineering patterns, where real-time pipelines coexist with curated and validated datasets rather than replacing them. In mature platforms, streaming vs batch processing is implemented as two layers over the same ingestion backbone, not two competing pipelines.

Streaming for action, batch for truth

A useful way to simplify streaming vs batch processing in hybrid environments is to define two explicit layers:

- Action layer (streaming processing)

Built for responsiveness: triggers, monitoring, personalization, automation. - Truth layer (batch processing)

Built for stability: reconciled reporting, compliance datasets, historical aggregates.

Streaming outputs are optimized for speed, but they may change as late events arrive or as state is corrected. Batch outputs are optimized for correctness and repeatability. Hybrid architectures work when stakeholders understand this separation and when both layers are designed intentionally rather than emerging accidentally.

In other words, streaming vs batch processing becomes manageable when the organization stops expecting one layer to serve both purposes.

Reconciling the two layers without chaos

Hybrid architectures break down when streaming and batch systems diverge without governance. If dashboards show one number and certified reports show another, trust collapses quickly.

To prevent this, mature architectures introduce explicit reconciliation mechanisms:

- shared ingestion standards and schema contracts

- replay capability for recomputation

- validation checkpoints before publishing certified datasets

- automated drift detection between streaming aggregates and batch recomputations

These practices are especially emphasized in data governance and enterprise analytics guidance, where accuracy and consistency must remain enforceable even as pipelines scale.

Ultimately, streaming vs batch processing is not about choosing which model is “better.” It is about assigning roles. Streaming supports action. Batch supports certification. Hybrid systems succeed when that boundary is explicit, measurable, and operationally enforced.

Platform Engineering Implications: Streaming Changes the Operating Model

Continuous systems require continuous observability

One of the most profound differences in streaming vs batch processing is not technical — it is operational. Batch systems operate in cycles. Streaming systems operate continuously.

In batch processing, observability is usually binary: the job ran successfully or it failed. Failures are visible and isolated. In streaming systems, pipelines may continue running while producing delayed, incomplete, or inconsistent outputs. A green status indicator does not guarantee correctness.

This shifts observability from “job health” to “system health.” Monitoring must include:

- consumer lag

- throughput stability

- state growth

- delivery guarantees

- schema compatibility

- replay safety

Streaming vs batch processing therefore changes what reliability means. In streaming architectures, correctness must be continuously measured rather than periodically validated.

This shift is precisely why platform engineering becomes central in streaming vs batch processing decisions: continuous systems require built-in observability, release safety, and governance capabilities rather than ad hoc operational fixes.

Modern distributed systems guidance increasingly treats observability as a product requirement rather than a debugging tool, especially in event-driven environments.

Release management becomes a risk surface

Batch deployments are relatively contained. A failed transformation can often be corrected and rerun without affecting downstream systems immediately.

Streaming deployments are different. Changes apply instantly. Incorrect logic, incompatible schema updates, or state mismanagement can propagate across services within seconds. Rollback is possible, but stateful streaming systems may require replay or migration before full recovery.

This is why streaming vs batch processing is also a release engineering decision. Streaming platforms demand:

- schema versioning discipline

- backward compatibility enforcement

- canary deployment strategies

- automated validation before full rollout

- defined replay procedures

Without these controls, streaming systems amplify small mistakes into platform-wide incidents.

Deployment safety patterns such as staged rollouts and rollback-first design are widely recognized as critical for continuous delivery in distributed systems.

Ownership and governance boundaries must be explicit

Batch pipelines are often owned by analytics or data teams. Streaming pipelines frequently cross product, platform, and infrastructure boundaries. Producers and consumers may be deployed independently, and event schemas can become implicit contracts between teams.

In streaming vs batch processing decisions, this introduces a governance challenge. Who owns the event schema? Who approves breaking changes? Who monitors downstream lag or drift?

Without explicit ownership boundaries, streaming systems accumulate hidden dependencies. Over time, these dependencies create fragility that is difficult to diagnose.

In mature organizations, streaming processing is treated as a platform capability rather than a collection of isolated pipelines. That means:

- centralized schema governance

- documented event contracts

- automated compatibility checks

- clear incident response ownership

- lifecycle management for topics and consumers

Ultimately, streaming vs batch processing changes the operating model because it transforms data pipelines into continuously running distributed systems. The organization must be prepared to operate them as such. Platform engineering is what makes streaming vs batch processing operable at scale by standardizing observability, contracts, and release safety.

What Streaming vs Batch Processing Really Determines in Enterprise Data Platforms

In most organizations, streaming vs batch processing is treated as a technical architecture decision. But at scale, it becomes a governance decision: what counts as “real-time truth,” what counts as “certified truth,” and who owns the boundary between them.

The reason streaming vs batch processing keeps showing up in enterprise data strategy is that it forces teams to define how much inconsistency they are willing to tolerate in exchange for speed. Streaming pipelines optimize for immediacy, but they also introduce operational overhead: state management, schema evolution risk, and the constant need for monitoring. Batch pipelines optimize for certification, but they introduce delays that can weaken responsiveness.

For leadership teams, the real challenge is not picking one model. It’s designing a system where streaming vs batch processing coexist without producing contradictory outcomes. That requires explicit rules around publication, validation gates, and reconciliation processes. Without those rules, teams end up with dashboards that no one trusts and reports that arrive too late to matter.

In practice, the companies that succeed treat data architecture as a managed operating model. That often means introducing centralized service oversight, vendor coordination, and performance metrics around data pipelines—especially when multiple teams or providers are involved. This is exactly where IT Services Management becomes relevant: not as a technical layer, but as the control plane that ensures outsourced or distributed execution stays aligned with internal standards, SLAs, and continuous improvement cycles

It also means scaling delivery capacity without losing collaboration quality. For many teams, nearshore delivery models make streaming vs batch processing implementation feasible because they enable real-time collaboration and team extension without long hiring cycles

And when organizations need fast access to specialized engineers—data, DevOps, or platform roles—IT outsourcing can be the practical mechanism that accelerates execution while keeping selection and technical alignment under client control

Ultimately, streaming vs batch processing is not about picking a winner. It’s about designing a system that supports fast operational decisions while still producing certified, auditable truth. Enterprises that treat that boundary as a product—rather than an accident—build data platforms that scale cleanly into 2026 and beyond.